Satellite and Avionics Development

I have had practical experience in small satellite and avionics design since 2012. I have been directly involved in the development of two CubeSat projects and contributed to three launched CubeSats (FirstMOVE, MOVE-II, and MOVE-IIb), as well as to the TDP-3 experiment aboard the stratospheric balloon BEXUS 22. My first contact with avionics architectures was research on the fault-tolerant avionics network standard ARINC664/AFDX, which provided its developers with feedback on potential future improvements.

I joined the development of FirstMOVE during NASA project Phase D and contributed to its flight software and on-board computer (FreeRTOS on ARM9). After the spacecraft’s launch in 2013, I worked on ground control and mission operations, and subsequently on failure diagnostics. FirstMOVE partially succeeded in its solar cell testing mission for Airbus Defence and Space: we retrieved part of the telemetry needed to achieve several mission objectives, but after about a month, FirstMOVE experienced an increasing number of reboots due to on-board computer crashes. With limited data and minimal on-orbit debugging capabilities, we troubleshot FirstMOVE as far as possible. We lost the ability to command the spacecraft after two months.

Based on our experience developing and troubleshooting FirstMOVE, we set out to plan an improved successor CubeSat. However, as a prerequisite for future funding, the German space agency DLR required us to conduct a formal post-mortem analysis of FirstMOVE, together with a review of the development process and project history. We therefore conducted a very thorough investigation covering FirstMOVE’s entire development cycle. The process allowed us to re-evaluate design decisions and identify potential failure sources, such as the cause of the frequent on-board computer reboots.

Subsequently, two colleagues and I began to develop a mission concept for MOVE-II (NASA Phase A). We worked with researchers from different disciplines and institutions to develop mission variants for several payloads. Initially, we considered two potential payloads for a 3U CubeSat: a deployable structure or a particle physics payload.

We could reuse many aspects of FirstMOVE’s design, but chose to redevelop the ADCS and OBC architectures as well as the transceivers. While developing the MOVE-II mission and the satellite’s architecture, I began researching fault-tolerance concepts that could be applied to CubeSats such as MOVE-II. We began implementing these concepts and published our results. This demonstrated our capacity to develop a second satellite, and we finally obtained funding from DLR for MOVE-II’s continued development, a launch slot, and staff salaries for several years.

As one of the main project members, I was fully involved in the development of every part, subsystem, and architecture of MOVE-II. A historic draft is depicted above. Initially, my main responsibility included all computerized subsystems (OBC, COM, EPS, and payload interface). As the project grew, subteams gradually formed, and I delegated subsystems where possible until my main responsibilities were systems engineering, managing the on-board computer team, and working on a Xilinx Spartan-6-based SoC architecture. We passed the relevant Phase B reviews around early 2015, then progressed through Phase C, testing several subsystem breadboard setups.

Prototype transceivers for S-band and UHF/VHF, as well as an early OBC design for MOVE-II, were tested as an experiment during the stratospheric balloon mission BEXUS 22 (see below). MOVE-II was deployed into LEO on December 3rd, 2018, as part of the rideshare launch “SSO-A: SmallSat Express” on a Falcon 9 rocket, and is still operating today. Based on the success of MOVE-II, DLR granted additional funding for a third satellite, MOVE-IIb, in 2017. MOVE-IIb launched in 2019; among other things, I contributed to its architecture and to the decision to fly a Xilinx Spartan-6 with an FPGA-implemented SoC and signal processing chain. Before the launch of MOVE-II, I successfully defended my master’s thesis.

Satellite Fault Tolerance

Between 2016 and 2019, I ran a research project on fault-tolerant avionics design in the Netherlands, funded through an ESA grant. Since the emergence of low-cost COTS-based small satellites, reliability has been one of the main constraints for missions built on such designs. For satellites below 50 kg, few of the traditional ways of achieving fault tolerance can be applied efficiently. For nanosatellites such as MOVE-II in particular, long-duration missions today require considerable risk acceptance. When designing MOVE-II’s mission, we realized there were simply no fault-tolerant satellite computer architectures that we could use, and no protective concepts that were technically feasible. Since 2014, I have been developing, implementing, and validating fault-tolerance concepts that aim to close this protection gap using all-COTS technology.

To realize this project, I started from a scientific theory, did the project planning and organization building, and obtained funding for it twice. Since then, I have taken it through concept design, validation, and implementation, to proof-of-concept testing and prototype development.

An on-board computer implementing the architecture I developed can be reconfigured dynamically at run time, trading performance against protection, as depicted below. This allows an avionics system to best meet the performance requirements of different mission phases or tasks. The architecture supports graceful aging: rather than trying to prevent chip-level degradation and accumulating permanent faults, it adapts to them, providing actual fault tolerance even for space missions of very long duration.
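A minimal Python sketch of this performance/protection trade-off, assuming (hypothetically) that the MPSoC exposes a list of processor tiles that self-tests have marked healthy, and that the desired redundancy level is chosen per mission phase. The function name `reconfigure` and its grouping policy are illustrative only, not the architecture’s actual reconfiguration logic.

```python
from typing import Iterable, List, Tuple


def reconfigure(healthy_tiles: Iterable[int], redundancy: int) -> List[Tuple[int, ...]]:
    """Partition healthy processor tiles into redundancy groups (hypothetical policy).

    redundancy=3 -> triple modular redundancy: each group can mask one faulty tile
    redundancy=2 -> duplex lockstep: each group can detect, but not mask, a fault
    redundancy=1 -> every tile runs its own task: maximum throughput, no protection
    """
    if redundancy < 1:
        raise ValueError("redundancy must be >= 1")
    tiles = sorted(healthy_tiles)
    # Graceful aging: never demand more replicas than there are healthy tiles left.
    level = min(redundancy, len(tiles))
    if level == 0:
        return []
    n_groups = len(tiles) // level
    # Leftover tiles (len(tiles) % level) stay idle as spares.
    return [tuple(tiles[i * level:(i + 1) * level]) for i in range(n_groups)]
```

For example, with eight healthy tiles, `reconfigure(range(8), 3)` yields two TMR groups plus two spares, while `reconfigure(range(8), 1)` runs eight independent tasks. Once aging leaves only tiles 0 and 5, a request for TMR degrades to a single duplex pair instead of failing outright.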

Instead of using classical radiation-hardened semiconductors or custom TMR processor IP, I combine software functionality with architectural and topological design features to achieve fault tolerance, as shown to the left. Among other techniques, my research combines software-enforced lockstep, distributed decentralized voting, FPGA reconfiguration, component-level self-testing, and adaptive application scheduling using mixed-criticality aspects. Each of these measures strengthens the others, so that together they protect the system far better than they could if applied independently. My research has yielded a fault-tolerant system architecture that can be implemented purely with commodity hardware (COTS components and standard library IP), for virtually any software and operating system, without vendor lock-in.
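To make software-enforced lockstep with decentralized voting more concrete, here is a sketch under stated assumptions: replicas exchange a digest of their state at agreed checkpoints, every node receives all digests, and every node runs the same vote locally, so no central voter becomes a single point of failure. The names `checkpoint_digest` and `local_vote`, and the choice of SHA-256 as the digest, are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Dict, Optional, Set, Tuple


def checkpoint_digest(state: bytes) -> str:
    """Compress one replica's state at a checkpoint into a fixed-size digest."""
    return hashlib.sha256(state).hexdigest()


def local_vote(digests: Dict[int, str]) -> Tuple[Optional[str], Set[int]]:
    """Majority vote that every node runs on its own copy of all digests.

    Returns (majority digest, nodes that disagree with it), or
    (None, all nodes) if no strict majority exists.
    """
    if not digests:
        raise ValueError("no digests to vote on")
    value, count = Counter(digests.values()).most_common(1)[0]
    if count * 2 <= len(digests):
        return None, set(digests)
    return value, {node for node, d in digests.items() if d != value}


if __name__ == "__main__":
    # Hypothetical checkpoint states of three lockstepped replicas;
    # replica 2 suffered an upset in register r1.
    states = {0: b"pc=0x40;r1=7", 1: b"pc=0x40;r1=7", 2: b"pc=0x40;r1=5"}
    digests = {node: checkpoint_digest(s) for node, s in states.items()}
    # Decentralized: each node computes the verdict itself.
    verdicts = [local_vote(digests) for _node in states]
    print(verdicts[0][1])  # prints {2}
```

Because each node evaluates the same digest table, healthy nodes reach the same verdict independently and could, for instance, isolate the dissenting replica and resynchronize it from a majority checkpoint.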

As a platform for this architecture, I developed a strongly compartmentalized MPSoC design for FPGA and ASIC use, which is depicted to the right. Having already developed SoCs on Xilinx FPGAs since MOVE-II, I also implemented this MPSoC for a range of 7-series, UltraScale, and UltraScale+ development boards. The logic placement of one of these designs for a Kintex UltraScale KU060 FPGA is depicted below. With support from ESA, I obtained access to ARM processor and system IP, and therefore designed the entire platform around the constraints and capabilities of the Cortex-A53. However, the licensing conditions severely constrained testing and would have prevented publication of these designs. Instead, I used Xilinx MicroBlaze as well as RISC-V in my research, publications, and proof-of-concept setups running on development boards. From the start, I designed the architecture to be part of a modern, distributed, and networked avionics platform such as IMA/ARINC653.

I have been working on this architecture since mid-2015 in a truly interdisciplinary research project. I developed it from an initial idea into a concise concept, which I then implemented, tested, and validated as a series of breadboard proofs of concept. Since the conclusion of this project, I have begun to combine these proofs of concept into a prototype for future radiation testing.

A system diagram of this setup is depicted below.

Hardware Development and Testing

I have considerable practical experience with testing, debugging, and analyzing failures in a broad variety of systems at all scales. I have extensive experience testing pure hardware systems as well as software, from individual applications and protocol stacks to operating system kernels and assembly code. Owing to my background in IT security, I also have several years of work experience designing, testing, and analyzing critical systems, including systems that had been attacked or infected by malware.

I began analyzing hardware and software long before I joined the workforce. I started fixing computers at age 12, and as a teenager I dedicated much of my spare time to overclocking and tuning systems and components. Since the mid-2000s, I have been affiliated with makerspaces: from 2007 to 2011 with MetaLab in Vienna, and since 2019 as a member of the Tokyo Hackerspace.

From 2002 to 2007, I worked as a technical consultant, solving hardware and software issues that were too challenging for our corporate customers to solve themselves. My position had a strong emphasis on diagnosing, troubleshooting, and repairing a broad variety of hardware and software systems. Disaster recovery was a major aspect of my work, and my expertise was frequently requested for evaluating computer-related insurance cases and, occasionally, in legal proceedings. I restored vintage computer systems and proprietary factory control equipment that had failed after decades of use without backup or replacement. We were also frequently tasked with handling security incidents or cleaning up the fallout of malware in our clients’ systems and networks.

As the pre-existing data center and network infrastructure at Ars Electronica was prone to failure, my initial focus after joining the company in 2007 was on disaster recovery and business continuity planning. Much of my work during the subsequent two years went into recovering hardware and developing reliable system architectures to replace failed systems. Where this was too risky, infeasible, or uneconomical, I instead introduced fail-over or other fault-tolerance measures to existing systems.

Documenting existing legacy system designs and creating documentation for newly introduced system and network landscapes was an important aspect of my job.

I am also well versed in testing embedded and FPGA-based co-designed systems, and have done this professionally for the past five years. For this, I have used practically every technique that is economically feasible in an academic environment. I frequently use a broad variety of standard test interfaces and tools, have performed fault injection using a variety of methods, and have experience debugging even large software setups.

One of my early contributions to the FirstMOVE satellite was debugging and analyzing issues in its FreeRTOS-based flight software. Since MOVE-II, hardware design has become a large part of my work. I have been continuously developing hardware/FPGA-based proof-of-concept designs and testbench setups based on the needs of MOVE-II and of my research. I worked on the Spartan-6-based SoC architecture for MOVE-II, and developed an autonomous saving subsystem for the spacecraft, which is depicted to the right and below.

To design PCBs for MOVE-II, as well as parts of the FPGA-based signal processing toolchain of MOVE-II’s transceivers, my colleagues and I used Altium Designer extensively. I also explored the Siemens/Mentor application suite for PCB design and netlist simulation. Since early 2019, I have been working with KiCad and Keysight’s Advanced Design System for PCB design. Most of the hardware I have developed was geared towards FPGA testing and development, and towards supporting ongoing projects I was involved in. Examples of utility components that I have built over the past years are depicted below.

These were the first three simple PCB designs I developed. These devices and their follow-up designs eventually formed an entire ecosystem of fault-tolerant devices. The device family can be freely interconnected to enable rapid development of satellite architectures. Through a set of quick-to-develop, low-cost expansion modules, it supports standard interfaces such as PCI Express, Aurora, and PHY-less and PHY-driven Ethernet, as well as industry-specific interfaces such as SpaceWire, ARINC664/AFDX, and FlexRay.

FPGA Development and Radiation Testing

Over the past years, I have written several medium-sized VHDL cores for use with the fault-tolerant OBC architecture I developed. These implement supervisor control and remote debugging of the MPSoC, as well as communication with a configuration controller implemented in static logic within the FPGA. Since 2014, I have continuously used Xilinx Vivado and ISE as well as the relevant IP libraries, including AXI, MicroBlaze, and network-on-chip IP.

I was invited to join the Xilinx Radiation Test Consortium (XRTC) in 2018 and have been an active contributor since then. The XRTC’s mission is to characterize and mitigate radiation-induced effects in FPGAs, and to make the relevant radiation test data publicly available and comparable across FPGA generations, so as to increase FPGA use in critical applications. To this end, the XRTC develops and maintains standard test hardware and infrastructure for radiation testing as well as laser fault injection, and systematically conducts test campaigns. The consortium consists of NewSpace and traditional space companies and institutions.
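Making results comparable across FPGA generations usually relies on normalized metrics. As general background, not a description of the XRTC’s own procedures, the single-event cross-section relates the number of observed events to the particle fluence the device was exposed to, and can be normalized per bit of the tested resource:

```latex
\sigma_{\text{dev}} = \frac{N_{\text{events}}}{\Phi}
\quad [\text{cm}^2],
\qquad
\sigma_{\text{bit}} = \frac{N_{\text{events}}}{\Phi \cdot N_{\text{bits}}}
\quad [\text{cm}^2/\text{bit}]
```

Here Φ is the fluence in particles/cm². For example, 20 observed upsets at a fluence of 10^7 particles/cm² give a device cross-section of 2 × 10^-6 cm².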

As an XRTC member, I have contributed to the development of a Xilinx KU060 device test card (DuT), analyzing and reviewing PCB designs and debugging hardware issues. The KU060 DuT card went into manufacturing in Q1/2020 and is depicted below.

From Q3/2020 to Q1/2021, we carried out a series of radiation tests. These focused on characterizing our Xilinx KU060 DuT cards, as well as a set of latch-up mitigation techniques for the 16 nm Xilinx UltraScale+ family. I hope that the resulting data will eventually be released to the public.

System and Network Architecture

When developing MOVE-II’s architecture, I began to apply the network and system architecture knowledge I had gained in all my previous projects and jobs. Throughout my research on fault tolerance, this broad practical experience has been an invaluable asset. In practice, I designed my fault-tolerance architecture to be part of AFDX-like avionics setups. Compared to traditionally trained space engineers, systems engineers, and avionics designers, I can bring in concepts from outside the traditional way of doing things and draw on experience gained in those other fields. This has allowed me to develop genuinely new solutions to challenges that traditional space systems engineering considered unsolvable.

I have a strong background in network and system architecture and in the design of larger computer setups, with attention to security, scalability, safety, and fault and failure tolerance. Already as a teenager, I was involved in organizing LAN parties and other e-sports events, where I learned how to design high-performance networks efficiently and at low cost, as well as event management.

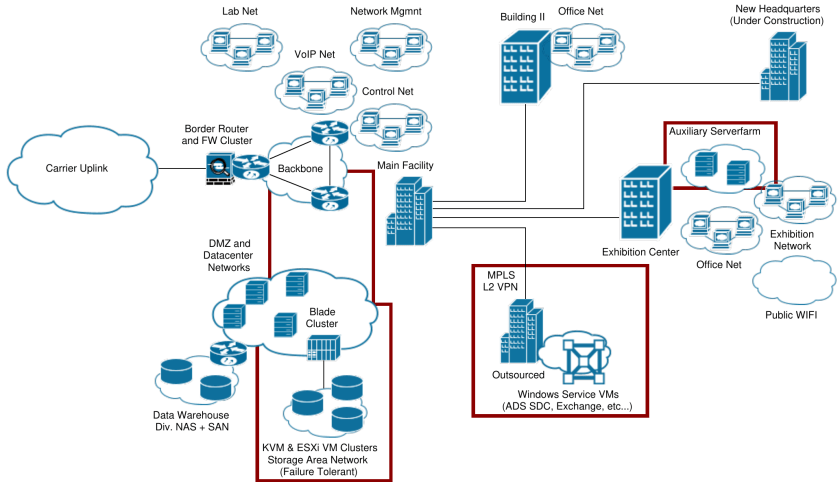

At Ars Electronica, I was responsible for managing, modernizing, and rebuilding the company’s network infrastructure as well as multiple data centers. The IT landscape, depicted below, spanned four main facilities across the city of Linz and a new building under construction, and included several smaller locations connected via VPN. Among other activities, I led a complete redesign of the corporate network architecture, which is why the depicted figure is now historical and can be shared without corporate security implications.

Much of my work during my two years at Ars Electronica was dedicated to introducing high-availability architectures and fault-tolerance measures, as well as documenting the pre-existing and newly introduced infrastructure. I gradually replaced the server infrastructure and introduced a broad variety of novel concepts, such as data-center-grade virtualization and several fault-tolerant cluster designs, one of which is depicted below. Where economical and operationally sensible, I migrated Ars Electronica’s server farm to virtualization-based setups running off a high-rel Fibre Channel storage area network (SAN), to ease the migration of services away from sometimes old and fragile system setups.

I introduced a new, modern, multi-tiered network topology that increased security and reliability while also improving overall network performance. I was deeply involved in planning the IT and security infrastructure for Ars Electronica’s new headquarters, then under construction. Over the course of six months, we planned the central infrastructure elements for this facility, from network design and cable path routing to the security and safety infrastructure, which included emergency power generator systems as well as new primary and secondary data center locations. Finally, I contributed to contingency planning for moving an entire data center, including most of Ars Electronica’s core services, from one facility to another without major service interruptions.

At the Fraunhofer Institute for Applied and Integrated Security, I developed a fully automated malware collection environment. Together with the IT early warning research group, I then implemented the concept in hardware, based on a highly isolated network topology I developed. The setup consisted of four parts: a malware collection environment; a strongly isolated analysis environment; facilities for archival, categorization, and logging; and a strongly isolated crisis intervention and control center. To ensure the highest level of isolation, the individual parts of the architecture were interfaced through an array of firewalls and special-purpose security measures. The resulting malware collection and analysis environment was highly automated, with considerable safeguards in place to ensure side-effect-free operation. This enabled the bulk collection, analysis, and classification of massive amounts of potentially malicious software and attacker code in compliance with all relevant legal requirements. The architecture of this setup is depicted below.
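The default-deny principle behind this kind of zone isolation can be sketched as an allow-list of inter-zone flows. The zone names below mirror the four parts of the setup, but the specific allowed flows are hypothetical and chosen only for illustration; the actual firewall rules of the Fraunhofer setup are not described here.

```python
from typing import FrozenSet, Tuple

# Hypothetical zone names modeled on the four parts of the setup.
ZONES = {"collection", "analysis", "archive", "control"}

# Hypothetical allow-list of (source, destination) flows; everything else is denied.
ALLOWED_FLOWS: FrozenSet[Tuple[str, str]] = frozenset({
    ("control", "collection"),   # operators steer the collection environment
    ("control", "analysis"),     # operators steer the analysis environment
    ("collection", "archive"),   # captured samples are archived
    ("analysis", "archive"),     # analysis results and logs are archived
})


def is_allowed(src: str, dst: str) -> bool:
    """Default-deny check for traffic between isolated zones."""
    if src not in ZONES or dst not in ZONES:
        raise ValueError(f"unknown zone: {src!r} -> {dst!r}")
    if src == dst:
        return True  # intra-zone traffic is governed by zone-local rules (not modeled)
    return (src, dst) in ALLOWED_FLOWS
```

The design choice is that any flow not explicitly listed is rejected, so a compromised analysis zone cannot, for example, initiate connections back into the control center.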

In 2012, I conducted an evaluation of AFDX for Airbus, paying particular attention to its performance and advising on directions for future improvements. I compared its capabilities and performance to other avionics network and interface standards, e.g., MIL-STD-1553, ARINC629/DATAC, and the historical ARINC429. In particular, I investigated its current limitations and the improvements that should be made in the future, e.g., using fiber optics instead of a classical copper harness. AFDX repurposes large parts of standard COTS technologies, such as IEEE 802.3 Ethernet and Quality of Service (IEEE 802.1p), and adds fault tolerance as well as hard and soft real-time capabilities. In this project, I leveraged all my previously developed knowledge of network architecture and critical system design for commercial and enterprise-grade hardware, and applied it across research domains. To explore the capabilities and performance of software-emulated AFDX setups, I constructed a network setup from COTS components, depicted to the left.
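The hard real-time guarantees of AFDX rest on bandwidth-limited virtual links (VLs): each VL may send at most one frame of up to Lmax bytes per bandwidth allocation gap (BAG), a power of two between 1 and 128 ms. The sketch below budgets worst-case VL bandwidth against a physical link, assuming a 100 Mbit/s link and counting frame bytes only; a stricter budget would add per-frame preamble and inter-frame gap overhead. The function names are hypothetical.

```python
from typing import Iterable, Tuple

# BAG values are restricted to powers of two between 1 ms and 128 ms.
VALID_BAGS_MS = {1, 2, 4, 8, 16, 32, 64, 128}


def vl_bandwidth_bps(lmax_bytes: int, bag_ms: int) -> float:
    """Worst-case bandwidth of one virtual link: one Lmax-sized frame per BAG."""
    if bag_ms not in VALID_BAGS_MS:
        raise ValueError(f"BAG must be one of {sorted(VALID_BAGS_MS)} ms")
    if not 64 <= lmax_bytes <= 1518:
        raise ValueError("Lmax must be a valid Ethernet frame size (64..1518 bytes)")
    # bits per frame * frames per second (1000 / BAG in ms)
    return lmax_bytes * 8 * 1000 / bag_ms


def link_utilization(vls: Iterable[Tuple[int, int]], link_bps: float = 100e6) -> float:
    """Fraction of a physical link reserved by a set of (Lmax, BAG) virtual links."""
    return sum(vl_bandwidth_bps(lmax, bag) for lmax, bag in vls) / link_bps
```

For instance, a VL with Lmax = 1518 bytes and a 1 ms BAG reserves about 12.1 Mbit/s; adding a second such VL with a 2 ms BAG brings the link to roughly 18 % utilization.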

Operating System Software Development

I have been an avid user of Unix-like operating systems, including Linux, since the 1990s. Fresh out of high school in 2001, two friends and I attempted to develop a geo-information web service. Our aim was to create something that can best be described today as an equivalent of Google Maps or OpenStreetMap as those platforms were at launch. This allowed me to deepen my knowledge of Unix-like operating systems and to learn coding and debugging the hard way. On the job, I learned how not to build complex server setups and advanced web service software stacks. I became acquainted with the inner workings of the Linux kernel, and became familiar with the standard internet server software, including high-availability and fail-over concepts, that still runs most sophisticated internet services today. I have been building upon this knowledge professionally for almost two decades.

I was an early adopter of enterprise-grade virtualization and have applied it in a business context since its use became economical. In the early 2000s, I began to carry out projects that used virtualization for testing and recovering failed systems, as well as for IT forensics. With the emergence of hardware-assisted virtualization in x86 systems, I began to design, operate, and maintain virtualized server environments. Especially in the early days of x86 virtualization, modifications to virtual machine monitor, hypervisor, and toolchain code were necessary to create even simple system setups. This is how I gradually gained experience in OS-level software development and debugging.

At Ars Electronica, I rolled out virtualization at data center scale. This allowed me to introduce a number of high-availability and fail-safe measures that were otherwise technically infeasible outside the mainframe market. I extensively used the KVM and Xen hypervisors for applications that commercial virtualization solutions could not handle at that time, including live migration of virtual machines, block-device fail-over, and hot-redundancy setups with heartbeat. Later, I refined these setups into truly robust environments such as the ones depicted in the previous sections. At that time, such setups required considerable development effort, so I began to automate them.
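Hot redundancy with heartbeat can be illustrated with a minimal fail-over monitor. This is a simplified sketch with hypothetical names: timestamps are passed in explicitly to keep it deterministic, and it does not model fencing, split-brain protection, or the live migration and block-device fail-over that the hypervisor-based setups provided.

```python
from typing import Dict, List


class FailoverMonitor:
    """Promote a standby node when the active node's heartbeats stop arriving."""

    def __init__(self, nodes: List[str], timeout_s: float) -> None:
        if len(nodes) < 2:
            raise ValueError("need an active node and at least one standby")
        self.timeout_s = timeout_s
        self.active = nodes[0]
        self.standbys = list(nodes[1:])
        # All nodes are considered seen at t = 0.
        self.last_seen: Dict[str, float] = {n: 0.0 for n in nodes}

    def heartbeat(self, node: str, now: float) -> None:
        """Record a heartbeat from `node` at time `now` (seconds)."""
        self.last_seen[node] = now

    def _alive(self, node: str, now: float) -> bool:
        return now - self.last_seen[node] <= self.timeout_s

    def check(self, now: float) -> str:
        """Return the node that should serve, failing over if the active one is silent."""
        if self._alive(self.active, now):
            return self.active
        for candidate in self.standbys:
            if self._alive(candidate, now):
                self.standbys.remove(candidate)
                self.standbys.append(self.active)  # old active rejoins as a standby
                self.active = candidate
                break  # stop iterating: the list was just modified
        return self.active
```

If no standby is alive either, the monitor keeps the current assignment rather than flapping between dead nodes.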

To realize the malware collection and analysis system I implemented at Fraunhofer (depicted in Figure 16), I again used virtualization and began to work with intrusion detection and security frameworks such as Snort, SELinux, and AppArmor. I worked on several QEMU-based high-interaction honeypots, as well as on a KVM variant designed for virtual machine introspection on the x86 platform. Part of this work, depicted above, involved malware obfuscation and protocol analysis through virtual machine introspection, aiming to improve the analysis of sophisticated polymorphic and metamorphic malware. In my bachelor’s thesis, I used these techniques to identify and emulate services for malware samples, but also to defeat malware analysis, as depicted below.

When virtualization extensions were announced for the ARM platform, I led a study on what novel virtual machine introspection functionality could be realized on ARM. I developed a KVM variant for system-call tracing on ARM systems, specifically focused on mobile-market system-on-chip platforms. The concept is depicted below. Through this work, I realized that the ARM architecture’s privilege isolation concept can benefit the robustness of critical systems. Based on this experience, and the growing popularity of mobile-market MPSoCs aboard miniaturized spacecraft, I envisioned how software functionality could be used to realize fault tolerance aboard spacecraft without hardware voting. After several months of further research and implementation work on this idea, I developed an early version of a software-implemented fault-tolerance concept. This is the genesis of my ongoing research on fault-tolerant avionics computing.

I came into contact with the GNU project and the FSF thanks to contacts at Ars Electronica and Fraunhofer AISEC. After collaborating for a while with members of the GNUnet core team on GNU’s secure network protocol stack, I was employed by the GNU project and stayed with it for two years. I am a founding member of the foundation that maintains and steers GNUnet and its development. I am still in touch with the project and follow it closely, despite my shift in focus to spacecraft design. Given the size and complexity of the GNUnet code base, working on it improved my software architecture skills. It also enabled me to implement and apply the knowledge of cryptography and network protocol design that I had gained since my apprenticeship and throughout my academic studies. Additionally, I learned to work in a highly structured software development team with clearly defined code ownership and enforced coding and documentation standards. Among other things, I was responsible for the protocol translation, VPN, and tunnel routing subsystems of the GNUnet stack, and implemented various cryptosystems, including homomorphic encryption protocols. I also ported several subsystems to Windows.

My first experience debugging avionics-related code was working with a software implementation of the AFDX protocol stack and diagnosing issues in FirstMOVE’s FreeRTOS-based flight software. After the conclusion of the FirstMOVE mission, I applied my deep knowledge of operating system architecture and development to analyze potential operating system candidates for MOVE-II. My main finding from this study was that, in theory, a vast number of operating systems and RTOSes can serve as a base for developing flight software and payload applications. In practice, however, only a select handful of properly maintained OSes should be used, considering code maturity, quality and availability of documentation, and library and toolchain support.

Software Fault Tolerance and Security

I have been working on IT security and fault tolerance for over a decade, in both industrial and academic environments. Since the mid-2000s, I have applied existing software fault-tolerance concepts and developed new ones to address the fault-tolerance, fail-over, safety, and availability challenges I faced in projects across different positions. Given that I was dealing mainly with commercial hardware, software fault tolerance was often the only viable way to protect critical systems. Most of these concepts, originally developed to protect server setups and network services on the ground, can be applied to avionics as well. However, the different operating environments, operational constraints, and fault profiles must be considered, which is currently not common practice in either scientific research or space engineering.

Since 2013, I have been continuously developing new fault-tolerance concepts, as well as adapting existing concepts from IT security and high-rel network architecture to make them usable for avionics design. Significant aspects of IT security management overlap with space systems engineering, including risk management, disaster recovery, business continuity, and contingency planning. Space systems engineering considers these same aspects, though with a different objective. Hence, IT security and space systems engineering approach similar issues in similar ways, and in practice can use many of the same tools.

Before working on avionics, I gathered considerable experience with applied cryptography in various industrial projects. A significant part of my academic studies was dedicated to the research and implementation of cryptosystems, ciphers, erasure codes, and checksum algorithms. When designing MOVE-II’s architecture, I first had to protect the integrity of data storage. I developed and implemented several novel protective concepts that use software measures to achieve storage fault tolerance with radiation-soft COTS components. Among others, I designed and implemented a radiation-robust filesystem, a protective concept for flash memory (both depicted below), and a flexible, software-driven memory scrubber for DRAM with commercial ECC. In doing so, I re-imagined solutions that are actively used to achieve IT and network security and reliability.
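The idea behind a software-driven scrubber is to read every protected word periodically, so that single-bit upsets are corrected and written back before a second upset in the same word makes them uncorrectable. The sketch below simulates one scrubbing pass on a plain Python list, with a Hamming(7,4) single-error-correcting code standing in for the commercial ECC. This is an assumption for illustration: real DRAM ECC is typically SEC-DED over 64-bit words and corrects in hardware on read, and the function names are hypothetical.

```python
from typing import List


def hamming74_encode(nibble: int) -> int:
    """Encode 4 data bits into a 7-bit Hamming codeword (bit i holds position i+1)."""
    d1, d2, d3, d4 = ((nibble >> i) & 1 for i in range(4))
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    bits = [p1, p2, d1, p4, d2, d3, d4]  # positions 1..7
    return sum(b << i for i, b in enumerate(bits))


def syndrome(codeword: int) -> int:
    """XOR of the positions of all set bits: 0 for a valid codeword,
    otherwise the position of a single flipped bit."""
    s = 0
    for pos in range(1, 8):
        if (codeword >> (pos - 1)) & 1:
            s ^= pos
    return s


def scrub(memory: List[int]) -> int:
    """One scrubbing pass: read every word, write back a corrected codeword
    for any single-bit error, and return the number of corrected words.
    Note: a SEC-only code miscorrects double-bit errors; SEC-DED would detect them."""
    corrected = 0
    for addr, word in enumerate(memory):
        s = syndrome(word)
        if s:
            memory[addr] = word ^ (1 << (s - 1))
            corrected += 1
    return corrected
```

Scrubbing frequency is the main design knob: the shorter the interval between passes, the lower the chance that two upsets accumulate in the same word.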

The composite erasure coding system I developed to ensure the integrity of flash memory is depicted below. It was inspired by the signal coding layers used in 10G Ethernet and PCIe to maintain signal integrity at very high clock frequencies and maximize data transfer rates. Rather than protecting a link, I proposed applying an LDPC+Reed-Solomon-based erasure coding system to safeguard data integrity in modern multi-level cell flash memory and to protect against component faults.
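A full LDPC+Reed-Solomon codec is beyond a short example. The sketch below illustrates only the outer erasure principle, using a deliberately simpler, swapped-in code: a single XOR parity page across a stripe of flash pages, as in RAID 5. It is not the composite scheme described above. In a composite design, an inner code typically corrects raw bit errors inside a page and flags pages it cannot decode as erasures for the outer code; single XOR parity can rebuild only one erased page per stripe, whereas Reed-Solomon codes can rebuild several. All names are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_pages(pages: List[bytes]) -> bytes:
    """Byte-wise XOR of equally sized pages."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*pages))


def add_parity(data_pages: List[bytes]) -> List[bytes]:
    """Append one parity page so that any single erased page can be rebuilt."""
    if len({len(p) for p in data_pages}) != 1:
        raise ValueError("need at least one page, and all pages must be the same size")
    return data_pages + [xor_pages(data_pages)]


def recover(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one erased page (marked None) from the surviving pages.

    The XOR of all pages in a stripe, parity included, is zero, so the
    missing page equals the XOR of the survivors."""
    missing = [i for i, p in enumerate(stripe) if p is None]
    if len(missing) > 1:
        raise ValueError("single parity can only recover one erasure per stripe")
    survivors = [p for p in stripe if p is not None]
    if not missing:
        return survivors
    rebuilt = xor_pages(survivors)
    return [rebuilt if p is None else p for p in stripe]
```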

I published the individual concepts as conference papers. Since then, SSD manufacturers have begun to adopt this scheme to increase the yield, reliability, and lifetime of commercial and industrial-grade NAND flash drives and Phase Change Memory products. These papers, together with systems engineering and computer architecture work for MOVE-II, formed my master’s thesis. This research has won several awards, among others from the AIAA.

The emergence of virtualization and software-defined networking architectures in the late 2000s allowed me to drastically expand what I could do with software fault-tolerance measures. The skills and knowledge I developed during this time enabled me to apply these measures to large-scale setups at Ars Electronica, at low cost and on short notice. I also repurposed many of the concepts I developed there for high-security environments, such as those encountered at Fraunhofer.

The original idea for the fault-tolerant on-board computer architecture I developed in the past years actually originated in setups I had built for Ars Electronica’s high-availability virtualization cluster. I concluded that, for nanosatellite applications, building custom fault-tolerant processors is not a viable solution. Instead, a software-implemented voting concept on a mobile-market MPSoC was feasible with the available technology and within the constraints of a 2U CubeSat.

Initially, my concept built upon a COTS quad-core MPSoC running KVM virtual machines with I/O voting. The concept later evolved into an FPGA-based MPSoC architecture combining multiple fault-tolerance measures.
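I/O voting compares the outputs of replicated virtual machines before they reach a peripheral. A minimal sketch, assuming three replicas that each produce an output buffer for the same I/O request; how the buffers are intercepted from the hypervisor is not modeled, and the function names are hypothetical. It uses bitwise 2-out-of-3 majority, the same voting rule as in hardware TMR.

```python
from typing import List


def vote_output(a: bytes, b: bytes, c: bytes) -> bytes:
    """Bitwise 2-out-of-3 majority over three replicas' output buffers."""
    if not len(a) == len(b) == len(c):
        raise ValueError("replica outputs differ in length; cannot vote bitwise")
    # Each result bit is set iff at least two replicas have it set.
    return bytes((x & y) | (x & z) | (y & z) for x, y, z in zip(a, b, c))


def dissenting_replicas(a: bytes, b: bytes, c: bytes) -> List[int]:
    """Indices of replicas whose output differs from the voted result."""
    voted = vote_output(a, b, c)
    return [i for i, out in enumerate((a, b, c)) if out != voted]
```

Only the voted buffer would be committed to the device, while a replica that repeatedly appears in `dissenting_replicas` is a candidate for restart or resynchronization.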

Refining the concept depicted above, I eventually arrived at a much slimmer, more flexible architecture that can be implemented on an FPGA or any ASIC without proprietary processor cores or virtualization.

A sketch of the originally planned MOVE-II OBC architecture for which this concept was designed is depicted at the beginning of this page. The variant of the software fault-tolerance concept I implemented in the past years is depicted below. The system architecture serving as the platform for this implementation is described in detail at the start of this page. I designed this architecture from the ground up as part of an integrated modular avionics setup (ARINC651/IMA).

Management and Consulting Experience

Throughout my career, in industry and in academic projects, I have held management and consulting roles, which allowed me to develop my communication skills. I have learned to collaborate with, consult, manage, and supervise a diverse range of people with different backgrounds, technical skills, and experience levels. This has been especially important since I work and research in a multi-disciplinary field.

At Ars Electronica, I worked with administrative personnel, artists, scientists, and event staff to help make their projects a reality.

Part of my job was to provide solutions, advise on technical feasibility, and work around hard technological limitations. Internally, I was the technical expert for network architecture and played a key role in planning Ars Electronica’s new headquarters, which was under construction at the time.

At Data-Link International, my responsibilities initially consisted of consulting, and I gradually took over the daily management of the technical department. The department was responsible for advising corporate clients and providing assistance with troubleshooting, disaster recovery, insurance assessment, and technology support in general. In 2005, I became head of the department and was responsible for managing a small team.

I also became a supervisor and instructor for apprentices and interns, while leading my team through the company’s financial difficulties. In my last year at Data-Link International, I worked with and managed employees with disabilities. I learned how to treat all employees, regardless of their background, with dignity and patience while teaching them technical skills. This was one of the most challenging non-technical experiences I have encountered to this day, besides herding the egos of individual professors at university.

Throughout my academic career, I was involved in organizing academic events for the student population, such as excursions to data centers. I was one of the main organizers of three IT security conferences held between 2009 and 2011. In addition, I successfully established projects between university and industry on several occasions. During my bachelor’s studies in Hagenberg, I was an active member of a successful security challenge team, eventually becoming a co-organizer. I was also one of the lead developers of MOVE-II, taking a leading role in managing technical teams, supervising students, delegating tasks to meet project milestones, and helping to secure funding. I have supervised student projects, e.g., during the Leiden/ESA Astrophysics Program for Summer Students, and was a teaching assistant for Digital Design and FPGA Architecture in 2016 and 2017.

I have worked in a variety of positions, with a range of responsibilities, in several industries as well as academic environments, in three different countries. This diverse background has been essential for the research on satellite design and avionics I have been doing over the past eight years. I took up my first job in 2001 and have worked ever since, with the sole exception of 1.5 semesters during my bachelor’s studies, when the academic schedule did not allow even a part-time job. I was called to emergency system rescue missions in the middle of the night and on weekends, and spent more time in data centers than I care to remember. I have had customers hug me for saving their businesses and cry on my shoulder in relief, and others scream at me in despair.

I went from being a computer enthusiast organizing e-sports events, to an engineer and manager, to a researcher and satellite designer. Today, I am a multi-disciplinary engineer and scientist working on avionics, bringing together concepts, skills, and experience from computer and electrical engineering, physics, and space engineering. I combine academic knowledge with industrial implementation experience to improve spaceflight.